
Humanoid robots are no longer the stuff of science fiction. Imagine a world where robots not only collaborate with us in factories but also greet us in stores, aid in surgeries and care for our loved ones. With Tesla planning to deploy thousands of Optimus robots by 2026, the age of humanoid robots is closer than we think.

This vision is becoming increasingly tangible as more companies showcase groundbreaking innovations. The 2025 Consumer Electronics Show (CES) offered several examples of how robotics is advancing in both functionality and human-centric design. These included ADAM, a robot bartender from Richtech Robotics that mixes more than 50 types of drinks and interacts with customers, and Tombot Inc.’s robotic puppies that wag their tails and make sounds designed to comfort older adults with dementia. While there may be a market for these and other robots on display at the show, it is still early days for broad deployment of this type of robotic technology.

Nevertheless, real technological progress is being made in the field. Increasingly, this includes “humanoid” robots that use generative AI to develop more human-like abilities, enabling them to learn, sense and act in complex environments. From Tesla’s Optimus to Realbotix’s Aria, humanoid robots are likely to proliferate over the next decade.

Despite these promising advancements, some experts caution that fully human-like capabilities remain a distant goal. Citing shortcomings in current technology, Yann LeCun, one of the “Godfathers of AI,” argued recently that AI systems do not “have the capacity to plan, reason … or understand the physical world.” He added that the limiting factor for robots today is their intelligence: “we can’t get them to be smart enough.”

LeCun may be right, but that does not mean we won’t soon see more humanoid robots. Elon Musk recently said that Tesla will produce several thousand Optimus units in 2025 and that he expects to ship 50,000 to 100,000 of them in 2026. That would be a dramatic increase from the handful that exist today performing circumscribed functions. Of course, Musk has been known to miss his own timelines; in 2016, for example, he said fully autonomous driving would be achieved within two years.

Still, it seems clear that significant advances are being made with humanoid robots. Tesla is not alone in this pursuit: Agility Robotics, Boston Dynamics and Figure AI are among the other leaders in the field.

Business Insider recently spoke with Agility Robotics CEO Peggy Johnson, who said it would soon be “very normal” for humanoid robots to work alongside humans across a variety of workplaces. Last month, Figure announced in a LinkedIn post: “We delivered F.02 humanoid robots to our commercial client, and they’re currently hard at work.” With significant backing from major investors including Microsoft and Nvidia, Figure is positioned to be a fierce competitor in the humanoid robot market.

Creating a world view

LeCun does have a point, however: more advances are required before robots approach full human capabilities. Moving parts in a factory is far simpler than navigating dynamic, complex environments.

The current generation of robots faces three key challenges: processing visual information quickly enough to react in real time, understanding subtle cues in human behavior, and adapting to unexpected changes in their environment. Most humanoid robots today depend on cloud computing, and the resulting network latency can make even simple tasks, like picking up an object, difficult.

One company working to overcome current robotics limitations is startup World Labs, founded by “AI Godmother” Fei-Fei Li. Speaking with Wired, Li said: “The physical world for computers is seen through cameras, and the computer brain behind the cameras. Turning that vision into reasoning, generation and eventual interaction involves understanding the physical structure, the physical dynamics of the physical world. And that technology is called spatial intelligence.”

Generative AI supports spatial intelligence by helping robots map their surroundings in real time, much as humans do, and predict how objects might move or change. Such advances are crucial for creating autonomous humanoid robots that can navigate complex, real-world scenarios with the adaptability and decision-making skills needed to succeed.

While spatial intelligence relies on real-time data to build mental maps of the environment, another approach is to help a humanoid robot infer the real world from a single still image. As explained in a preprint paper, Generative World Explorer (GenEx) uses AI to create a detailed virtual world from a single image, mimicking how humans make inferences about their surroundings. Although still in the research phase, this capability could help robots make split-second decisions or navigate new environments with limited sensor data, allowing them to quickly understand and adapt to spaces they have never encountered before.

The ChatGPT moment for robotics is coming

While World Labs and GenEx push the boundaries of AI reasoning, Nvidia’s Cosmos and GR00T are addressing the challenges of equipping humanoid robots with real-world adaptability and interactive capabilities. Cosmos is a family of AI “world foundation models” that help robots understand physics and spatial relationships, while GR00T (Generalist Robot 00 Technology) allows robots to learn by watching humans, much as an apprentice learns from a master. Together, these technologies help robots understand both what to do and how to do it naturally.

These innovations reflect a broader push in the robotics industry to equip humanoid robots with both cognitive and physical adaptability. GR00T could enable humanoid robots to help in healthcare by observing and mimicking medical professionals, while GenEx might allow robots to navigate disaster zones by inferring environments from limited visual input. As reported by Investor’s Business Daily, Nvidia CEO Jensen Huang said: “The ChatGPT moment for robotics is coming.”

Another company working to create physical AI models is Google DeepMind. Timothy Brooks, a research scientist there, posted this month on X about the company’s plans to build large-scale generative models that simulate the physical world.

These emerging physical world models will better predict, plan and learn from experience, all fundamental capabilities for future humanoid robots.

The robots are coming

As of early 2025, humanoid robots are largely prototypes. In the near term, they will focus on specific tasks, such as manufacturing, logistics and disaster response, where automation provides immediate value. Broader applications like caregiving or retail interactions will come later, as the technology matures. Still, progress in AI and mechanical engineering is accelerating humanoid robot development.

Consulting firm Accenture recently took note of the emerging full stack of robotics hardware, software and AI models purpose-built for machine autonomy in the human world. In its “2025 Technology Vision” report, the firm states: “Over the next decade, we will start to see robots casually and commonly interacting with people, reasoning their way through unplanned tasks, and independently taking actions in any kind of environment.”

Wall Street firm Morgan Stanley has estimated that the number of humanoid robots in the U.S. could reach 8 million by 2040 and 63 million by 2050. The firm said that, in addition to technological advances, long-term demographic shifts that create labor shortages may help drive their development and adoption.

Building trustworthy robots

Beyond the purely technical obstacles, potential societal objections must be overcome. If these concerns go unaddressed, public skepticism could hinder the adoption of humanoid robots, even in sectors where they offer clear benefits. To succeed, humanoid robots will need to be seen as trustworthy, and people will need to believe that they benefit society. As noted by MIT Technology Review, “few people would feel warm and comfortable with such a robot if it walked into their living room right now.”

To build trust, researchers are exploring ways to make robots appear more relatable. For instance, engineers in Japan have created a face mask from human skin cells and attached it to robots. In a study published last summer and reported by The New York Times, the lead researcher said: “Human-like faces and expressions improve communication and empathy in human-robot interactions, making robots more effective in health care, service and companionship roles.” The implication is that a more human-like appearance could also foster trust.

In addition to appearing trustworthy, human-like robots will need to behave ethically and responsibly at all times to win human acceptance. In public spaces, for example, humanoid robots with cameras might inadvertently collect sensitive data, such as conversations or facial details, raising concerns about surveillance. Policies ensuring transparent data practices will be critical to mitigating these risks.

The next decade

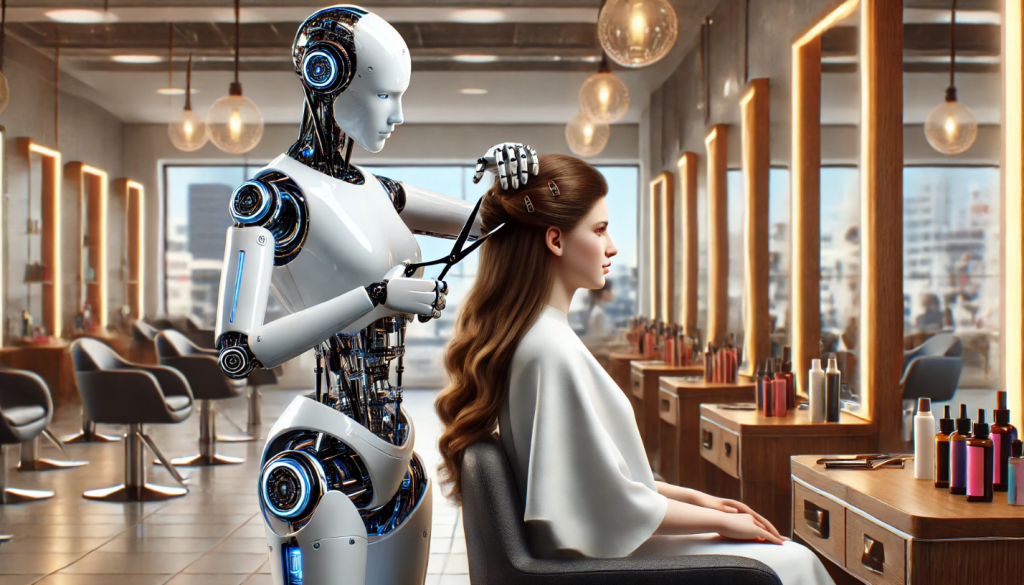

In the near term, humanoid robots will be confined to specialized roles in structured environments, such as manufacturing, logistics and disaster response, that play to their current strengths. Broader applications, like healthcare, caregiving and retail operations, will emerge as the technology matures.

As humanoid robots become more visible in daily life, their presence will profoundly affect, and potentially reshape, human interactions and societal norms. Beyond performing tasks, these machines will integrate into the social fabric, requiring humans to navigate new relationships with technology. Their adoption could ease labor shortages in aging societies and improve efficiency in service sectors, but it may also provoke debates about job displacement, privacy and human identity in an increasingly automated world. Preparing for these shifts will demand not just technological progress, but thoughtful societal adaptation.

By addressing challenges and leveraging the efficiency and adaptability of humanoid robots, we can ensure these technologies serve as tools for progress. Shaping this future isn’t just the responsibility of policymakers and tech leaders — it is a conversation for everyone. Public participation will be essential to ensuring humanoid robots enhance society and address real human needs.

Gary Grossman is EVP of technology practice at Edelman and global lead of the Edelman AI Center of Excellence.
