
Leading figures in AI, including Anthropic’s Dario Amodei and OpenAI’s Sam Altman, suggest that “powerful AI” or even superintelligence could appear within the next two to 10 years, potentially reshaping our world.

In his recent essay Machines of Loving Grace, Amodei provides a thoughtful exploration of AI’s potential, suggesting that powerful AI (what others have termed artificial general intelligence, or AGI) could be achieved as early as 2026. Meanwhile, in The Intelligence Age, Altman writes that “it is possible that we will have superintelligence in a few thousand days” (or by 2034). If they are correct, the world will change dramatically sometime in the next two to 10 years.

As leaders in AI research and development, Amodei and Altman are at the forefront of pushing boundaries for what is possible, making their insights particularly influential as we look to the future. Amodei defines powerful AI as “smarter than a Nobel Prize winner across most relevant fields — biology, programming, math, engineering, writing…” Altman does not explicitly define superintelligence in his essay, although it is understood to be AI systems that surpass human intellectual capabilities across all domains.

Not everyone shares this optimistic timeline, although these less sanguine viewpoints have not dampened enthusiasm among tech leaders. For example, OpenAI co-founder Ilya Sutskever is now a co-founder of Safe Superintelligence (SSI), a startup dedicated to advancing AI with a safety-first approach. When announcing SSI last June, Sutskever said: “We will pursue safe superintelligence in a straight shot, with one focus, one goal and one product.” Speaking about AI advances a year ago when still at OpenAI, he noted: “It’s going to be monumental, earth-shattering. There will be a before and an after.” In his new capacity at SSI, Sutskever has already raised a billion dollars to fund company efforts.

These forecasts align with Elon Musk’s estimate that AI will outperform all of humanity by 2029. Musk recently said that AI would be able to do anything any human can do within the next year or two. He added that AI would be able to do what all humans combined can do in a further three years, in 2028 or 2029. These predictions are also consistent with the long-standing view from futurist Ray Kurzweil that AGI would be achieved by 2029. Kurzweil made this prediction as far back as 1995 and wrote about it in his best-selling 2005 book, “The Singularity Is Near.”

The imminent transformation

As we stand on the brink of these potential breakthroughs, we need to assess whether we are truly ready for this transformation. Ready or not, if these predictions are right, a fundamentally new world will soon arrive.

A child born today could enter kindergarten in a world transformed by AGI. Will AI caregivers be far behind? Suddenly, the futuristic vision from Kazuo Ishiguro in “Klara and the Sun” of an android artificial friend for those children when they reach their teenage years does not seem so farfetched. The prospect of AI companions and caregivers suggests a world with profound ethical and societal shifts, one that might challenge our existing frameworks.

Beyond companions and caregivers, the implications of these technologies are unprecedented in human history, offering both revolutionary promise and existential risk. The potential upsides of powerful AI are profound. Beyond robotic advances, they could range from developing cures for cancer and depression to finally achieving fusion energy. Some see this coming epoch as an era of abundance, with people having new opportunities for creativity and connection. However, the plausible downsides are equally momentous, from vast unemployment and income inequality to runaway autonomous weapons.

In the near term, MIT Sloan principal research scientist Andrew McAfee sees AI as enhancing rather than replacing human jobs. On a recent Pivot podcast, he argued that AI provides “an army of clerks, colleagues and coaches” available on demand, even as it sometimes takes on “big chunks” of jobs.

But this measured view of AI’s impact may have an end date. Elon Musk said that in the longer term, “probably none of us will have a job.” This stark contrast highlights a crucial point: Whatever seems true about AI’s capabilities and impacts in 2024 may be radically different in the AGI world that could be just several years away.

Tempering expectations: Balancing optimism with reality

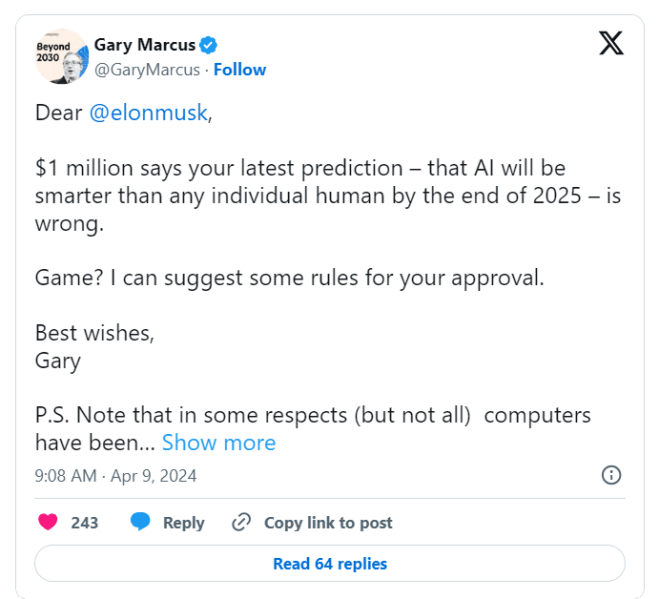

Despite these ambitious forecasts, not everyone agrees that powerful AI is on the near horizon or that its effects will be so straightforward. Deep learning skeptic Gary Marcus has been warning for some time that current AI technologies are not capable of AGI, arguing that they lack the needed deep reasoning skills. He famously took aim at Musk’s recent prediction that AI would soon be smarter than any human and offered a $1 million bet that Musk was wrong.

Linus Torvalds, creator and lead developer of the Linux operating system, said recently that he thought AI would change the world but currently is “90% marketing and 10% reality.” He suggested that for now, AI may be more hype than substance.

Perhaps lending credence to Torvalds’s assertion is a new paper from OpenAI showing that its leading frontier large language models (LLMs), including GPT-4o and o1, struggle to answer simple questions that have factual answers. The paper describes a new “SimpleQA” benchmark “to measure the factuality of language models.” The best performer was o1-preview, yet it still answered about half of the questions incorrectly.

Looking ahead: Readiness for the AI era

Optimistic predictions about the potential of AI contrast with the technology’s present state as shown in benchmarks like SimpleQA. These limitations suggest that while the field is progressing quickly, some significant breakthroughs are needed to achieve true AGI.

Nevertheless, those closest to the developing AI technology foresee rapid advancement. On a recent Hard Fork podcast, OpenAI’s former senior adviser for AGI readiness Miles Brundage said: “I think most people who know what they’re talking about agree [AGI] will go pretty quickly and what does that mean for society is not something that can even necessarily be predicted.” Brundage added: “I think that retirement will come for most people sooner than they think…”

Amara’s Law, coined in 1973 by Stanford’s Roy Amara, says that we often overestimate new technology’s short-term impact while underestimating its long-term potential. While AGI’s actual arrival timeline may not match the most aggressive predictions, its eventual emergence, perhaps in only a few years, could reshape society more profoundly than even today’s optimists envision.

However, the gap between current AI capabilities and true AGI is still significant. Given the stakes involved — from revolutionary medical breakthroughs to existential risks — this buffer is valuable. It offers crucial time to develop safety frameworks, adapt our institutions and prepare for a transformation that will fundamentally alter human experience. The question is not only when AGI will arrive, but also whether we will be ready for it when it does.

Gary Grossman is EVP of technology practice at Edelman and global lead of the Edelman AI Center of Excellence.
